Netty Parameter Configuration and Tuning


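- On the client, parameters are configured through Bootstrap's option() method.
- On the server:
  - new ServerBootstrap().option() configures the ServerSocketChannel.
  - new ServerBootstrap().childOption() configures each accepted SocketChannel.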
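The split between option() and childOption() mirrors the fact that a listening channel and a connection channel accept different socket options. A minimal JDK-only sketch of that difference follows; it uses java.nio channels directly instead of Netty, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.SocketOption;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Set;

// Illustrative helper (hypothetical name); plain JDK, no Netty required.
class ChannelOptionScopes {

    // Options supported by a listening channel (the kind ServerBootstrap.option() targets).
    static Set<SocketOption<?>> serverChannelOptions() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            return server.supportedOptions();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Options supported by a connection channel (the kind childOption() targets).
    static Set<SocketOption<?>> connectionChannelOptions() {
        try (SocketChannel channel = SocketChannel.open()) {
            return channel.supportedOptions();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("listening channel:  " + serverChannelOptions());
        System.out.println("connection channel: " + connectionChannelOptions());
    }
}
```

The exact sets depend on the JDK and platform, but TCP_NODELAY should only show up for the connection channel, which is why it is set with childOption() on a Netty server.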

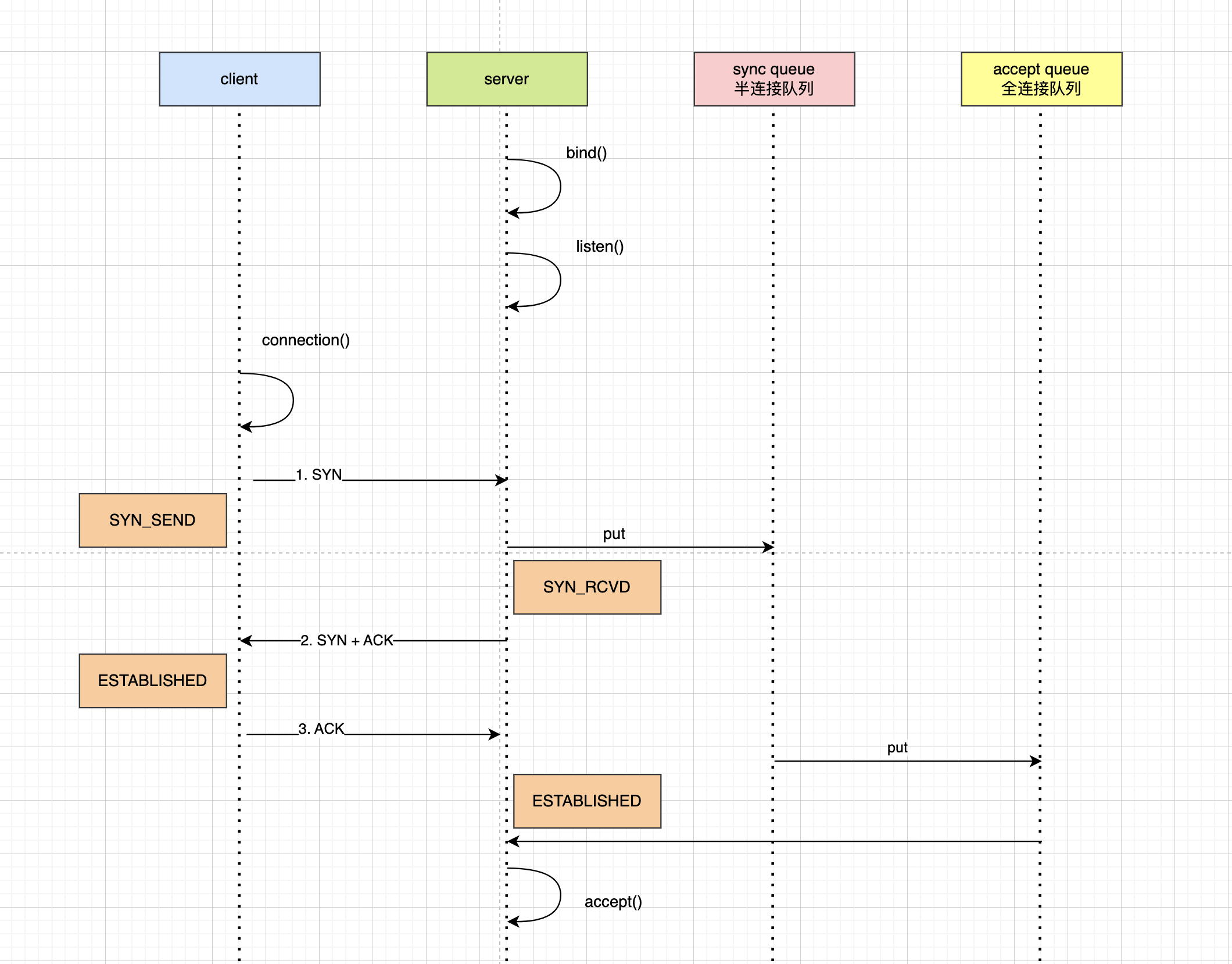

TCP three-way handshake diagram

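- First handshake: the client sends a SYN to the server and moves to SYN_SENT. On receiving it, the server moves to SYN_RCVD and places the request in the SYN queue.
- Second handshake: the server replies to the client with SYN+ACK. On receiving it, the client moves to ESTABLISHED and sends an ACK to the server.
- Third handshake: the server receives the ACK, moves to ESTABLISHED, and moves the connection from the SYN queue to the accept queue.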

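- Before Linux 2.2, the backlog value covered the size of both queues. Since 2.2, each queue is controlled by its own parameter.
- SYN queue: the half-open connection queue
  - Its size is set by /proc/sys/net/ipv4/tcp_max_syn_backlog. When syncookies are enabled, there is logically no upper limit.
- accept queue: the fully established connection queue
  - Its size is set by /proc/sys/net/core/somaxconn. When listen() is called, the kernel compares the backlog argument with this system parameter and uses the smaller of the two.
  - If the accept queue is full, the server rejects the new connection: the client receives a connection-refused error or, depending on the operating system and its settings, simply times out.
  - In Netty, the size can be set with option(ChannelOption.SO_BACKLOG, value).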
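The handshake steps and the two queues above can be sketched as a toy model. This is only an illustration under simplifying assumptions: the class and method names are hypothetical, clients are plain strings, and real kernels differ in details (for example in exactly how many entries a given backlog admits).

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy model of a listening socket's two queues (hypothetical class, not kernel or Netty code).
class ListenQueueModel {
    private final Deque<String> synQueue = new ArrayDeque<>();    // half-open: SYN received, ACK pending
    private final Deque<String> acceptQueue = new ArrayDeque<>(); // established, waiting for accept()
    private final int acceptQueueLimit;

    ListenQueueModel(int requestedBacklog, int somaxconn) {
        // listen(): the smaller of the requested backlog and the system limit wins.
        this.acceptQueueLimit = Math.min(requestedBacklog, somaxconn);
    }

    // First handshake: a SYN arrives and the connection enters the SYN queue.
    void onSyn(String client) {
        synQueue.addLast(client);
    }

    // Third handshake: the ACK arrives; promote the connection if the accept queue has room.
    boolean onAck(String client) {
        if (acceptQueue.size() >= acceptQueueLimit || !synQueue.remove(client)) {
            return false;
        }
        acceptQueue.addLast(client);
        return true;
    }

    // The application calls accept(): the oldest established connection leaves the queue.
    String accept() {
        return acceptQueue.pollFirst();
    }

    int acceptQueueLimit() {
        return acceptQueueLimit;
    }

    public static void main(String[] args) {
        ListenQueueModel server = new ListenQueueModel(2, 128);
        for (String client : new String[] {"c1", "c2", "c3"}) {
            server.onSyn(client);
            System.out.println(client + " established: " + server.onAck(client));
        }
    }
}
```

With a backlog of 2 and nobody calling accept(), the third client is not promoted, which is the situation the backlog experiment later in these notes provokes on a real server.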

Client configuration: CONNECT_TIMEOUT_MILLIS

Configuring the connection timeout on the client:

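    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.nio.NioSocketChannel;
    import io.netty.handler.logging.LogLevel;
    import io.netty.handler.logging.LoggingHandler;
    import lombok.extern.slf4j.Slf4j;

    @Slf4j
    public class TestConnectionException {
        public static void main(String[] args) {
            NioEventLoopGroup eventLoopGroup = new NioEventLoopGroup();
            try {
                Bootstrap bootstrap = new Bootstrap()
                        .group(eventLoopGroup)
                        // Fail the connect attempt if it takes longer than 1000 ms
                        .option(ChannelOption.CONNECT_TIMEOUT_MILLIS, 1000)
                        .channel(NioSocketChannel.class)
                        .handler(new LoggingHandler(LogLevel.DEBUG));
                ChannelFuture future = bootstrap.connect("localhost", 8000);
                future.sync().channel().closeFuture().sync();
            } catch (InterruptedException e) {
                // The waiting thread was interrupted; this is not a connect timeout
                Thread.currentThread().interrupt();
                throw new RuntimeException(e);
            } catch (Exception e) {
                // sync() rethrows the connect failure, including a connect timeout
                log.debug("Connection failed or timed out", e);
                throw new RuntimeException(e);
            } finally {
                eventLoopGroup.shutdownGracefully();
            }
        }
    }

Server configuration: the backlog parameter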

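Server:

    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.nio.NioServerSocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;
    import io.netty.handler.logging.LoggingHandler;

    public class TestBackLogServer {
        public static void main(String[] args) {
            try {
                ChannelFuture channelFuture = new ServerBootstrap()
                        .group(new NioEventLoopGroup())
                        .channel(NioServerSocketChannel.class)
                        // Requested size of the accept queue (not a cap on total connections)
                        .option(ChannelOption.SO_BACKLOG, 2)
                        .childHandler(new ChannelInitializer<NioSocketChannel>() {
                            @Override
                            protected void initChannel(NioSocketChannel ch) throws Exception {
                                ch.pipeline().addLast(new LoggingHandler());
                            }
                        }).bind(8000).sync();
                channelFuture.channel().closeFuture().sync();
            } catch (Exception e) {
                // Do not swallow failures silently
                e.printStackTrace();
            }
        }
    }

Client:

    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.nio.NioSocketChannel;
    import io.netty.handler.logging.LoggingHandler;
    import lombok.extern.slf4j.Slf4j;

    @Slf4j
    public class TestBackLogClient {
        public static void main(String[] args) {
            try {
                ChannelFuture channelFuture = new Bootstrap()
                        .group(new NioEventLoopGroup())
                        .channel(NioSocketChannel.class)
                        .handler(new ChannelInitializer<NioSocketChannel>() {
                            @Override
                            protected void initChannel(NioSocketChannel ch) throws Exception {
                                ch.pipeline().addLast(new LoggingHandler());
                            }
                        }).connect("localhost", 8000);
                channelFuture.sync();
                channelFuture.channel().closeFuture().sync();
            } catch (InterruptedException e) {
                log.error("client connect error:", e);
            }
        }
    }

Start the server in debug mode and set a breakpoint at NioEventLoop.java:721, so that the event loop pauses before it accepts connections and they pile up in the accept queue: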

    // ......
    if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {
        unsafe.read();
    }
    // ......

Open three clients. The console of the third client reports an error:

Exception in thread "main" io.netty.channel.AbstractChannel$AnnotatedConnectException: Operation timed out: localhost/127.0.0.1:8000

Caused by: java.net.ConnectException: Operation timed out

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:715)

at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:337)

at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:710)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:658)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:584)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:496)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:995)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:750)

19:22:32 [DEBUG] [nioEventLoopGroup-2-1] i.n.h.l.LoggingHandler - [id: 0xdc97fc3e] CLOSE

==Default backlog: 200 on Windows, 128 on other operating systems==
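As far as I understand, Netty derives this default in io.netty.util.NetUtil and, where the file is readable, prefers the value in /proc/sys/net/core/somaxconn over the hard-coded fallback. The sketch below is a simplified illustration of that idea, not Netty's code; the class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: resolves a default backlog from the somaxconn file content.
class DefaultBacklog {

    // Falls back to 200 (Windows) or 128 (other systems) when no usable value is available.
    static int resolve(String somaxconnFileContent, boolean windows) {
        int fallback = windows ? 200 : 128;
        if (somaxconnFileContent == null) {
            return fallback;
        }
        try {
            int value = Integer.parseInt(somaxconnFileContent.trim());
            return value > 0 ? value : fallback;
        } catch (NumberFormatException e) {
            return fallback;
        }
    }

    public static void main(String[] args) {
        // startsWith avoids matching "Darwin", which also contains "win".
        boolean windows = System.getProperty("os.name", "").toLowerCase().startsWith("windows");
        String content = null;
        Path file = Paths.get("/proc/sys/net/core/somaxconn");
        if (Files.isReadable(file)) {
            try {
                content = new String(Files.readAllBytes(file), StandardCharsets.US_ASCII);
            } catch (IOException e) {
                // Keep the fallback if the file cannot be read.
            }
        }
        System.out.println("default backlog: " + resolve(content, windows));
    }
}
```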

ulimit -n [value]

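An operating system parameter: the maximum number of file descriptors a process may open. Every socket connection consumes one file descriptor, so this limit also caps the number of concurrent connections.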
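To check which limit a running JVM actually got, the value can be read from inside the process. This is a minimal sketch assuming a HotSpot/OpenJDK runtime on a Unix-like system, where com.sun.management.UnixOperatingSystemMXBean is available; the class name FileDescriptorLimit is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

// Hypothetical helper: reads the file descriptor limit of the current JVM process.
class FileDescriptorLimit {

    // Returns the maximum number of file descriptors, or -1 if the platform does not expose it.
    static long maxFileDescriptors() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.UnixOperatingSystemMXBean) {
            return ((com.sun.management.UnixOperatingSystemMXBean) os).getMaxFileDescriptorCount();
        }
        return -1L;
    }

    // Returns the number of currently open file descriptors, or -1 if unavailable.
    static long openFileDescriptors() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.UnixOperatingSystemMXBean) {
            return ((com.sun.management.UnixOperatingSystemMXBean) os).getOpenFileDescriptorCount();
        }
        return -1L;
    }

    public static void main(String[] args) {
        System.out.println("max fds:  " + maxFileDescriptors());
        System.out.println("open fds: " + openFileDescriptors());
    }
}
```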

TCP_NODELAY

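A SocketChannel parameter. It controls Nagle's algorithm, which merges small packets and sends them together at the cost of added latency. Setting TCP_NODELAY to true disables Nagle's algorithm, which is usually what a latency-sensitive application wants. The operating system enables Nagle's algorithm by default; whether Netty overrides that depends on the version (recent Netty 4.x releases appear to enable TCP_NODELAY by default on most platforms), so setting the option explicitly is the safe choice.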
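At the plain JDK level the flag can be toggled and read back on a java.net.Socket. A minimal sketch, independent of Netty, with a hypothetical class name:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.Socket;

// Hypothetical helper: toggles TCP_NODELAY on an unconnected JDK socket.
class TcpNoDelayDemo {

    // Reads the flag as the JDK/OS sets it on a fresh socket.
    static boolean defaultNoDelay() {
        try (Socket socket = new Socket()) {
            return socket.getTcpNoDelay();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Disables Nagle's algorithm and reads the flag back.
    static boolean enableNoDelay() {
        try (Socket socket = new Socket()) {
            socket.setTcpNoDelay(true);
            return socket.getTcpNoDelay();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("TCP_NODELAY by default:     " + defaultNoDelay());
        System.out.println("TCP_NODELAY after enabling: " + enableNoDelay());
    }
}
```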

In Netty, the option is applied to accepted connections through childOption:

    ChannelFuture channelFuture = new ServerBootstrap()
            .group(new NioEventLoopGroup())
            .channel(NioServerSocketChannel.class)
            // Requested size of the accept queue (not a cap on total connections)
            .option(ChannelOption.SO_BACKLOG, 2)
            // Disable Nagle's algorithm on every accepted connection
            .childOption(ChannelOption.TCP_NODELAY, true)
            .childHandler(new ChannelInitializer<NioSocketChannel>() {
                @Override
                protected void initChannel(NioSocketChannel ch) throws Exception {
                    ch.pipeline().addLast(new LoggingHandler());
                }
            }).bind(8000).sync();
    channelFuture.channel().closeFuture().sync();

SO_SNDBUF & SO_RCVBUF

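- SO_SNDBUF is a SocketChannel parameter.
- SO_RCVBUF can be set on either a SocketChannel or a ServerSocketChannel (setting it on the ServerSocketChannel is recommended).

==The buffer sizes are adapted to the system automatically, so we generally do not need to tune them==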
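To see what the system picks when nothing is configured, the defaults can be read from a fresh JDK socket. A minimal sketch with a hypothetical class name; the values printed vary by operating system and kernel settings.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.Socket;

// Hypothetical helper: reads the OS-chosen default socket buffer sizes.
class SocketBufferDefaults {

    // Returns { SO_SNDBUF, SO_RCVBUF } in bytes for a freshly created socket.
    static int[] defaultBufferSizes() {
        try (Socket socket = new Socket()) {
            return new int[] { socket.getSendBufferSize(), socket.getReceiveBufferSize() };
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] sizes = defaultBufferSizes();
        System.out.println("SO_SNDBUF default: " + sizes[0] + " bytes");
        System.out.println("SO_RCVBUF default: " + sizes[1] + " bytes");
    }
}
```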

ALLOCATOR

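A SocketChannel parameter. It is the allocator used to create ByteBuf instances (the one returned by ctx.alloc()), and it determines whether buffers are pooled and whether they use heap or direct memory. The default is set up in io.netty.channel.DefaultChannelConfig.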
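To make the heap versus direct distinction concrete, here is a JDK-only sketch using java.nio.ByteBuffer. It does not use Netty's ByteBuf or its allocators, and the class name and the preferDirect flag are hypothetical stand-ins for the choice the allocator makes.

```java
import java.nio.ByteBuffer;

// Hypothetical helper: chooses between heap and direct (off-heap) buffers.
class HeapVsDirect {

    // preferDirect = true allocates off-heap memory; false allocates on the Java heap.
    static ByteBuffer allocate(int capacity, boolean preferDirect) {
        return preferDirect ? ByteBuffer.allocateDirect(capacity) : ByteBuffer.allocate(capacity);
    }

    public static void main(String[] args) {
        ByteBuffer heap = allocate(16, false);
        ByteBuffer direct = allocate(16, true);
        // A heap buffer is backed by a byte[]; a direct buffer lives outside the Java heap.
        System.out.println("heap:   isDirect=" + heap.isDirect() + ", hasArray=" + heap.hasArray());
        System.out.println("direct: isDirect=" + direct.isDirect());
    }
}
```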

The allocator can be configured through system properties:

    # pooled/unpooled
    -Dio.netty.allocator.type=pooled
    # Whether to avoid direct (off-heap) memory; true means heap memory is used
    -Dio.netty.noPreferDirect=true

RCVBUF_ALLOCATOR

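A SocketChannel parameter. It handles allocation for inbound data and decides the size of the inbound buffer, which is adjusted dynamically. Inbound buffers always use direct memory; whether they are pooled or unpooled is decided by the allocator.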
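The dynamic adjustment can be pictured with a toy sizing policy: grow quickly when a read fills the buffer, shrink only after two small reads in a row. This is a simplified illustration, not Netty's AdaptiveRecvByteBufAllocator (which works from a size table); the class name and the thresholds are assumptions.

```java
// Hypothetical, simplified adaptive receive-buffer sizer.
class AdaptiveBufferSizer {
    private final int min;
    private final int max;
    private int next;
    private boolean shrinkPending; // true after one small read; a second one triggers shrinking

    AdaptiveBufferSizer(int min, int initial, int max) {
        this.min = min;
        this.max = max;
        this.next = Math.max(min, Math.min(max, initial));
    }

    // Size to use for the next inbound buffer.
    int nextSize() {
        return next;
    }

    // Feed back how many bytes the last read actually produced.
    void record(int bytesRead) {
        if (bytesRead >= next) {
            // The buffer was filled completely: grow immediately.
            next = Math.min(max, next * 2);
            shrinkPending = false;
        } else if (bytesRead <= next / 2) {
            // Shrink cautiously: only after two consecutive small reads.
            if (shrinkPending) {
                next = Math.max(min, next / 2);
                shrinkPending = false;
            } else {
                shrinkPending = true;
            }
        } else {
            shrinkPending = false;
        }
    }

    public static void main(String[] args) {
        AdaptiveBufferSizer sizer = new AdaptiveBufferSizer(64, 1024, 65536);
        for (int bytesRead : new int[] {1024, 100, 100}) {
            sizer.record(bytesRead);
            System.out.println("read " + bytesRead + " bytes, next buffer size: " + sizer.nextSize());
        }
    }
}
```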