Deploying ELK with Docker Compose

Installing ELK with Docker

1. Download the project

```shell
git clone https://github.com/deviantony/docker-elk.git
cd docker-elk
```

2. Start the services

```shell
docker-compose up -d
```

3. Reset the passwords
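The password reset below only succeeds once Elasticsearch is accepting requests, which can take a while after docker-compose up -d. A minimal sketch for waiting first, assuming curl is installed on the host and port 9200 is published as in docker-elk; the function name wait_for_http and its retry defaults are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until the server answers with any HTTP status.
# Assumes curl is installed. With security enabled (as in docker-elk),
# Elasticsearch answers 401 to anonymous requests, which still means it is up.
wait_for_http() {
  url=$1
  attempts=${2:-30}  # hypothetical default: 30 tries
  delay=${3:-5}      # seconds to wait between tries
  i=1
  while [ "$i" -le "$attempts" ]; do
    # curl reports the status code 000 when it could not connect at all
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url") || code=000
    if [ "$code" != "000" ]; then
      echo "$url answered with HTTP $code"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "$url did not answer after $attempts attempts" >&2
  return 1
}
```

For example, wait_for_http http://localhost:9200 && docker-compose exec -T elasticsearch bin/elasticsearch-setup-passwords auto --batch runs the reset only after Elasticsearch has answered.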

```shell
docker-compose exec -T elasticsearch bin/elasticsearch-setup-passwords auto --batch
```

The output looks like this:

```
Changed password for user apm_system
PASSWORD apm_system = m4TeQ4gEMJw3X5xaMv4B
Changed password for user kibana_system
PASSWORD kibana_system = RcBkkUCKRtNboXVm7NWO
Changed password for user kibana
PASSWORD kibana = RcBkkUCKRtNboXVm7NWO
Changed password for user logstash_system
PASSWORD logstash_system = WHbRnZhklNZf5apfOw2K
Changed password for user beats_system
PASSWORD beats_system = aRBr1Q8diQHAcHq8LGMn
Changed password for user remote_monitoring_user
PASSWORD remote_monitoring_user = XPfmZHbB7lnEsInKdSTC
Changed password for user elastic
PASSWORD elastic = DSLWlX5rOhFe5ta7Rd2i
```

4. After saving the passwords somewhere safe, remove ELASTIC_PASSWORD from the elasticsearch service in docker-compose.yml. That variable is only used to initialize the keystore on the first startup; removing it ensures the services use only the passwords we just reset. Next, update the kibana and logstash config files, replacing the elastic user's password in them. These files appeared in the directory structure at the start of the article; for now, only these three need to change:

- kibana/config/kibana.yml

- logstash/config/logstash.yml

- logstash/pipeline/logstash.conf
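Copying the passwords into these files by hand is error-prone. A minimal sketch, assuming the setup-passwords output was saved to a file (the name passwords.txt is hypothetical) and that the three files still contain the old password as a literal string; the function names password_for and replace_password are also hypothetical:

```shell
#!/bin/sh
# Hypothetical helpers, assuming the setup-passwords output was saved first, e.g.:
#   docker-compose exec -T elasticsearch bin/elasticsearch-setup-passwords auto --batch > passwords.txt

# Print the password of one user from lines such as "PASSWORD elastic = xxxx".
password_for() {
  user=$1
  file=$2
  awk -v u="$user" '$1 == "PASSWORD" && $2 == u && $3 == "=" { print $4 }' "$file"
}

# Replace an old password string with a new one in each given file.
# Assumes neither value contains characters special to sed (the generated
# passwords shown above are alphanumeric) and that the old value appears
# nowhere else in the files; review each file afterwards.
replace_password() {
  old=$1
  new=$2
  shift 2
  for f in "$@"; do
    # write to a temp file and move it back (portable alternative to sed -i)
    sed "s/$old/$new/g" "$f" > "$f.tmp" && mv "$f.tmp" "$f" || return 1
  done
}
```

For example, new=$(password_for elastic passwords.txt), then replace_password OLD "$new" kibana/config/kibana.yml logstash/config/logstash.yml logstash/pipeline/logstash.conf, where OLD is whatever password those files currently contain.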

New approach

```yaml
version: "3.7"
services:
  # ELK log stack (ideally keep all image versions identical)
  elasticsearch:
    container_name: elasticsearch
    image: elasticsearch:7.17.5
    restart: on-failure
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      # single-node setup
      - discovery.type=single-node
      # lock the heap in memory to avoid swapping (better performance)
      - bootstrap.memory_lock=true
      # JVM heap size: initial / maximum
      - "ES_JAVA_OPTS=-Xms256m -Xmx256m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes: # mounted files
      - ./elasticsearch/data:/usr/share/elasticsearch/data
      - ./elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./elasticsearch/logs:/usr/share/elasticsearch/logs
    networks:
      - elknetwork
  kibana:
    container_name: kibana
    image: kibana:7.17.5
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "5601:5601"
    #volumes:
    #  - ./kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - elknetwork
  logstash:
    container_name: logstash
    image: logstash:7.17.5
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "9600:9600"
      - "5044:5044"
    volumes: # logstash.conf: log-processing config
      - ./logstash/data/:/usr/share/logstash/data
      # Logstash reads its settings from /usr/share/logstash/config, so mount the files there
      - ./logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash/conf/pipelines.yml:/usr/share/logstash/config/pipelines.yml
      - ./logstash/conf/jvm.options:/usr/share/logstash/config/jvm.options
      - ./logstash/pipeline:/usr/share/logstash/pipeline
    networks:
      - elknetwork
  filebeat:
    container_name: filebeat
    image: elastic/filebeat:7.17.5
    depends_on:
      - elasticsearch
      - logstash
      - kibana
    restart: on-failure
    volumes:
      - ./filebeat/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
    networks:
      - elknetwork
# use the custom bridge network (create it first with: docker network create elknetwork)
networks:
  elknetwork:
    external: true
```

Create the directories:

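```shell
mkdir -p elasticsearch/{conf,data,logs,plugins} kibana/conf logstash/{conf,data,pipeline} filebeat/{conf,logs}
# the containers run as a non-root user, so the mounted directories must be writable
# (logs included: Elasticsearch writes its GC log there)
chmod 777 elasticsearch/{conf,data,logs} logstash/{conf,data,pipeline}
```

./elasticsearch/conf/elasticsearch.yml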

```yaml
# host address (0.0.0.0 = listen on all interfaces)
network.host: 0.0.0.0
#network.bind_host: 0.0.0.0
#cluster.routing.allocation.disk.threshold_enabled: false
#node.name: es-master
#node.master: true
#node.data: true
# enable CORS (only needed when browser-based tools call Elasticsearch directly)
http.cors.enabled: true
# allowed CORS origins
http.cors.allow-origin: "*"
#http.port: 9200
#transport.tcp.port: 9300
```

./kibana/conf/kibana.yml

```yaml
server.name: "kibana"
server.host: "0.0.0.0"
# mind your local IP (the service name elasticsearch also works on elknetwork)
elasticsearch.hosts: ["http://elasticsearch:9200"]
i18n.locale: "zh-CN"
```

./logstash/conf/logstash.yml

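```yaml
# allow access through all of this host's IP addresses
http.host: "0.0.0.0"
# pipeline IDs for X-Pack centralized pipeline management (only used when xpack.management.enabled is true)
xpack.management.pipeline.id: ["main"]
```

./logstash/conf/jvm.options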

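```
-Xmx128m
-Xms128m
```

Add the pipeline ID and the mapping to the pipeline config directory. Note: the dash (-) must be followed by a space, and path.config must be indented to line up with pipeline.id (a nasty pitfall).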

./logstash/conf/pipelines.yml

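```yaml
- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline"
```

Add the pipeline config for the microservice application logs. pipelines.yml above sets the pipeline config directory inside the container to /usr/share/logstash/pipeline, and when Logstash starts, the host directory ./logstash/pipeline is mounted onto it. From then on, any pipeline config file added to the host directory (here elk-log.config) is picked up by Logstash when it starts.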

./logstash/pipeline/elk-log.config

```
input {
  beats {
    port => 5044
    client_inactivity_timeout => 36000
  }
}

filter {
  mutate {
    # drop fields we don't need
    remove_field => ["@version", "tags"]
  }
}

output {
  if [appname] == "gateway" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "gateway-log"
    }
  } else if [appname] == "auth" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "auth-log"
    }
  } else if [appname] == "adm" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "adm-log"
    }
  } else if [appname] == "project" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "project-log"
    }
  } else if [appname] == "product" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "product-log"
    }
  }
  # also print every event to the Logstash container log (useful for debugging)
  stdout {}
}
```

Deploy Filebeat on the server whose logs are being collected

Create the directories:

```shell
mkdir ./{conf,logs}
chmod 777 ./conf
```

docker-compose.yml

```yaml
version: "3.7"
services:
  filebeat:
    container_name: filebeat
    image: elastic/filebeat:7.17.5
    restart: on-failure
    volumes:
      - ./conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
      - /usr/local/springboot:/logs
    # logging driver for this container (json-file is Docker's default)
    logging:
      driver: json-file
```

Note: /usr/local/springboot is the folder on the application server that holds the logs to be collected.

./conf/filebeat.yml

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /logs/gateway/*.log
    fields:
      appname: gateway # custom field that Logstash uses to tell log sources apart
    fields_under_root: true # put the custom fields at the top level of the event instead of under "fields"
  - type: log
    enabled: true
    paths:
      - /logs/auth/*.log
    fields:
      appname: auth
    fields_under_root: true
processors:
  - drop_fields:
      fields: ["log","input","host","agent","ecs"] # drop fields we don't need
output.logstash:
  # public IP of the ELK server
  hosts: ['139.155.xxx.xxx:5044']
```

Or, listing each application's log file explicitly:

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /logs/gateway/gateway.log
    fields:
      appname: gateway
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/auth/auth.log
    fields:
      appname: auth
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/adm/adm.log
    fields:
      appname: adm
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/product/product.log
    fields:
      appname: product
    fields_under_root: true
processors:
  - drop_fields:
      fields: ["log","input","host","agent","ecs"]
output.logstash:
  hosts: ['139.155.xxx.xxx:5044']
```

Server setup:

| Server | Role |
|---|---|
| 139.155.xxx.xxx | Runs ELK |
| 47.94.xxx.xxx | Runs Filebeat and the applications whose logs are collected (Filebeat goes on whichever server holds the logs) |

On 139.155.xxx.xxx, first create the directories:

```shell
mkdir -p /usr/local/docker/elk
cd /usr/local/docker/elk
mkdir -p elasticsearch/{conf,data,logs,plugins/ik} kibana/conf logstash/{conf,data,pipeline}
# the directories need write permissions, otherwise startup fails (permission denied)
chmod 777 elasticsearch/{conf,data} logstash/{conf,data,pipeline}
```

Create the Docker network:

```shell
docker network create elknetwork
```

List the Docker networks to check that it was created:

```shell
docker network ls
```

Create the docker-compose.yml file:

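```yaml
version: "3.7"
services:
  # ELK log stack (ideally keep all image versions identical)
  elasticsearch:
    container_name: elasticsearch
    image: elasticsearch:7.17.5
    restart: on-failure
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      # single-node setup
      - discovery.type=single-node
      # lock the heap in memory to avoid swapping (better performance)
      - bootstrap.memory_lock=true
      # JVM heap size: initial / maximum
      - "ES_JAVA_OPTS=-Xms256m -Xmx256m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      # mount the data directory
      - ./elasticsearch/data:/usr/share/elasticsearch/data
      # mount the config file
      - ./elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      # mount the IK Chinese analysis plugin; the directory must contain the unpacked plugin
      # for the same ES version (7.17.5), since an empty plugin directory stops ES from starting
      - ./elasticsearch/plugins/ik:/usr/share/elasticsearch/plugins/ik
    networks:
      - elknetwork
  kibana:
    container_name: kibana
    image: kibana:7.17.5
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "5601:5601"
    volumes:
      - ./kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - elknetwork
  logstash:
    container_name: logstash
    image: logstash:7.17.5
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "9600:9600"
      - "5044:5044"
    volumes:
      - ./logstash/data/:/usr/share/logstash/data
      # Logstash reads its settings from /usr/share/logstash/config, so mount the files there
      - ./logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash/conf/pipelines.yml:/usr/share/logstash/config/pipelines.yml
      - ./logstash/conf/jvm.options:/usr/share/logstash/config/jvm.options
      - ./logstash/pipeline:/usr/share/logstash/pipeline
    networks:
      - elknetwork
# use the custom bridge network created above
networks:
  elknetwork:
    external: true
```

Write each config file: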

./elasticsearch/conf/elasticsearch.yml

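```yaml
# host address: allow access from all IPs
network.host: 0.0.0.0
node.name: "elasticsearch"
# enable CORS (only needed when browser-based tools call Elasticsearch directly)
http.cors.enabled: true
# allowed CORS origins
http.cors.allow-origin: "*"
```

./kibana/conf/kibana.yml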

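```yaml
server.name: "kibana"
server.host: "0.0.0.0"
# Elasticsearch address: your host's IP works, and so does the service name elasticsearch,
# which resolves because both containers are on elknetwork (node.name plays no part in this)
elasticsearch.hosts: ["http://elasticsearch:9200"]
# Chinese UI for Kibana (skip this if you are fine with English)
i18n.locale: "zh-CN"
```

./logstash/conf/logstash.yml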

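```yaml
# allow access through all of this host's IP addresses
http.host: "0.0.0.0"
# pipeline IDs for X-Pack centralized pipeline management (only used when xpack.management.enabled is true)
xpack.management.pipeline.id: ["main"]
```

./logstash/conf/jvm.options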

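```
-Xmx128m
-Xms128m
```

If the production server has enough resources, you can give Logstash a larger heap here or simply keep the default.

Add the pipeline ID and the mapping to the pipeline config directory. Note: the dash (-) must be followed by a space, and path.config must be indented to line up with pipeline.id (a nasty pitfall).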

./logstash/conf/pipelines.yml

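```yaml
- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline"
```

Add the pipeline config for the microservice application logs. pipelines.yml above sets the pipeline config directory inside the container to /usr/share/logstash/pipeline, and when Logstash starts, the host directory ./logstash/pipeline is mounted onto it. From then on, any pipeline config file added to the host directory (here elk-log.config) is picked up by Logstash when it starts.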
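Both the pipelines.yml formatting and the pipeline directory are easy to get wrong, and Logstash only complains after it starts. A minimal pre-start check, assuming it runs on the ELK server against the directory layout used in this article; the function name check_logstash_files is hypothetical, and it is a heuristic for these two pitfalls, not a full YAML validator:

```shell
#!/bin/sh
# Hypothetical pre-start check for the Logstash files, assuming the directory
# layout of this article (run it from /usr/local/docker/elk or pass that path).
check_logstash_files() {
  base=${1:-.}
  pipelines="$base/logstash/conf/pipelines.yml"
  if [ ! -f "$pipelines" ]; then
    echo "missing $pipelines" >&2
    return 1
  fi
  # a list item must start with "- " (dash followed by a space),
  # so flag lines such as "-pipeline.id: main"
  if grep -nE '^[[:space:]]*-[^[:space:]]' "$pipelines"; then
    echo "pipelines.yml: '-' must be followed by a space" >&2
    return 1
  fi
  # path.config must be indented under its "- pipeline.id" item
  if grep -nE '^path\.config' "$pipelines"; then
    echo "pipelines.yml: indent path.config under '- pipeline.id'" >&2
    return 1
  fi
  # at least one pipeline file must exist in the mounted pipeline directory
  if [ -z "$(ls -A "$base/logstash/pipeline" 2>/dev/null)" ]; then
    echo "$base/logstash/pipeline is empty" >&2
    return 1
  fi
  echo "Logstash files look OK"
}
```

Run it as check_logstash_files /usr/local/docker/elk before docker-compose up -d; the offending lines are printed with their line numbers.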

./logstash/pipeline/elk-log.config

Note: this file goes in ./logstash/pipeline, not in ./logstash/conf.

```
input {
  beats {
    port => 5044
    client_inactivity_timeout => 36000
  }
}

filter {
  mutate {
    # drop fields we don't need
    remove_field => ["@version", "tags"]
  }
}

output {
  if [appname] == "elk-gateway" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "elk-gateway-log"
    }
  } else if [appname] == "elk-auth" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "elk-auth-log"
    }
  } else if [appname] == "elk-adm" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "elk-adm-log"
    }
  } else if [appname] == "elk-project" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "elk-project-log"
    }
  } else if [appname] == "elk-product" {
    elasticsearch {
      hosts => "http://elasticsearch:9200"
      index => "elk-product-log"
    }
  }
  # also print every event to the Logstash container log (useful for debugging)
  stdout {}
}
```

Note: the name after [appname] == (here "elk-gateway") is your own choice, but it must match the appname in the Filebeat config one-to-one, and every log input you add in Filebeat needs a matching branch here as well.
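Since the appname values must match one-to-one, a small script can compare the two files instead of eyeballing them. A minimal sketch, assuming the exact formats used in this article and that copies of both files are available on one machine; the function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helpers, assuming the formats used in this article:
#   filebeat.yml:      appname: elk-gateway
#   Logstash pipeline: if [appname] == "elk-gateway" {

# appname values set in filebeat.yml (trailing "# comments" are ignored)
filebeat_apps() {
  sed -n 's/^[[:space:]]*appname:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | sort -u
}

# appname values tested in the Logstash pipeline conditionals
logstash_apps() {
  sed -n 's/.*\[appname][[:space:]]*==[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | sort -u
}

# Report names that appear on only one side; returns 1 on any mismatch.
check_appnames() {
  fb_list=$(mktemp)
  ls_list=$(mktemp)
  filebeat_apps "$1" > "$fb_list"
  logstash_apps "$2" > "$ls_list"
  # comm -3 drops common lines: no indent = Filebeat only, tab-indented = Logstash only
  mismatch=$(comm -3 "$fb_list" "$ls_list")
  rm -f "$fb_list" "$ls_list"
  if [ -n "$mismatch" ]; then
    echo "appname mismatch (no indent: Filebeat only, indented: Logstash only):"
    echo "$mismatch"
    return 1
  fi
  echo "appname values match"
}
```

For example, check_appnames filebeat.yml logstash/pipeline/elk-log.config. A Logstash-only name (such as a branch for an application that has no Filebeat input yet) is harmless, while a Filebeat-only name means those events never reach an index.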

Now we can start the ELK services:

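```shell
cd /usr/local/docker/elk
docker-compose up -d
```

If pulling the images takes too long, you can stop the command, pull the images locally with docker pull first, and then start again (starting directly took me a long time).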
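A minimal sketch of pulling the images one at a time before running docker-compose up -d; the image names are copied from the docker-compose.yml above, and the function name pull_images is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: pull images one at a time. With no arguments it pulls
# the three images named in the docker-compose.yml above.
pull_images() {
  if [ "$#" -eq 0 ]; then
    set -- elasticsearch:7.17.5 kibana:7.17.5 logstash:7.17.5
  fi
  for image in "$@"; do
    echo "Pulling $image ..."
    docker pull "$image" || return 1
  done
}
```

Afterwards, docker-compose up -d starts from the local images. docker-compose pull also fetches every image in the compose file in one command; pulling one at a time just makes it easier to see which download is slow and to retry only that one.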

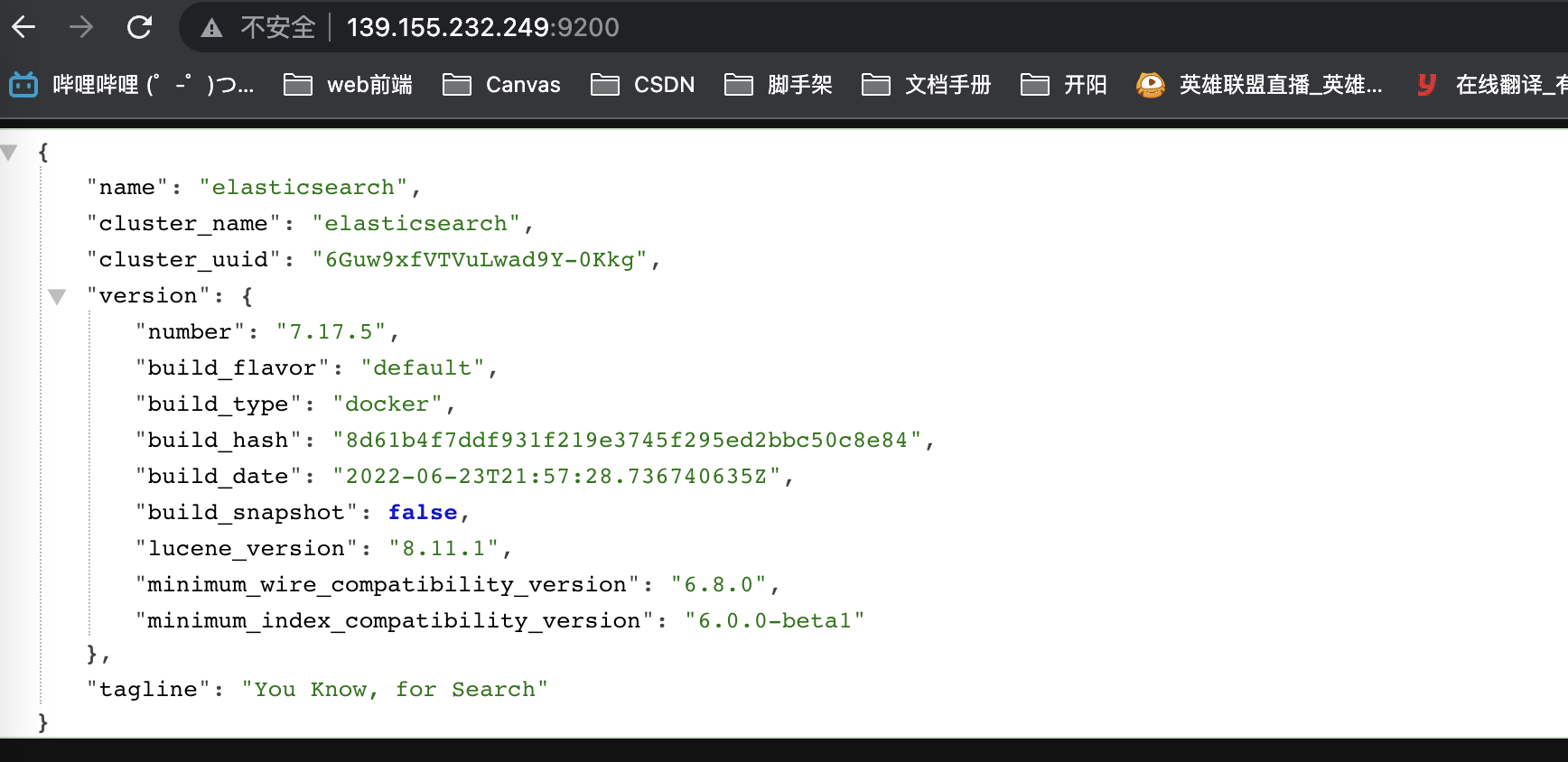

Once everything has started, you can test it:

Test Elasticsearch: http://139.155.xxx.xxx:9200

Test Kibana: http://139.155.xxx.xxx:5601
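The same two checks can be run from the shell. A minimal sketch, assuming curl is installed, security is not enabled (this compose file sets no passwords), and ELK_HOST is set to the ELK server's address (elided in this article); the function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical checks. Assumes curl is installed, security is not enabled,
# and ELK_HOST is set to the ELK server's address.
ELK_HOST=${ELK_HOST:-localhost}

check_es() {
  # the root endpoint returns node and version information as JSON
  curl -fsS "http://$ELK_HOST:9200" &&
    curl -fsS "http://$ELK_HOST:9200/_cluster/health?pretty"
}

check_kibana() {
  # Kibana's status API; HTTP 200 means Kibana answered its status endpoint
  code=$(curl -s -o /dev/null -w '%{http_code}' "http://$ELK_HOST:5601/api/status") || code=000
  echo "Kibana /api/status -> HTTP $code"
  [ "$code" = "200" ]
}
```

A green or yellow cluster status is normal here: on a single node, yellow only means replica shards have nowhere to be allocated.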

At this point, ELK is installed.

Now move to the application server: 47.94.xxx.xxx

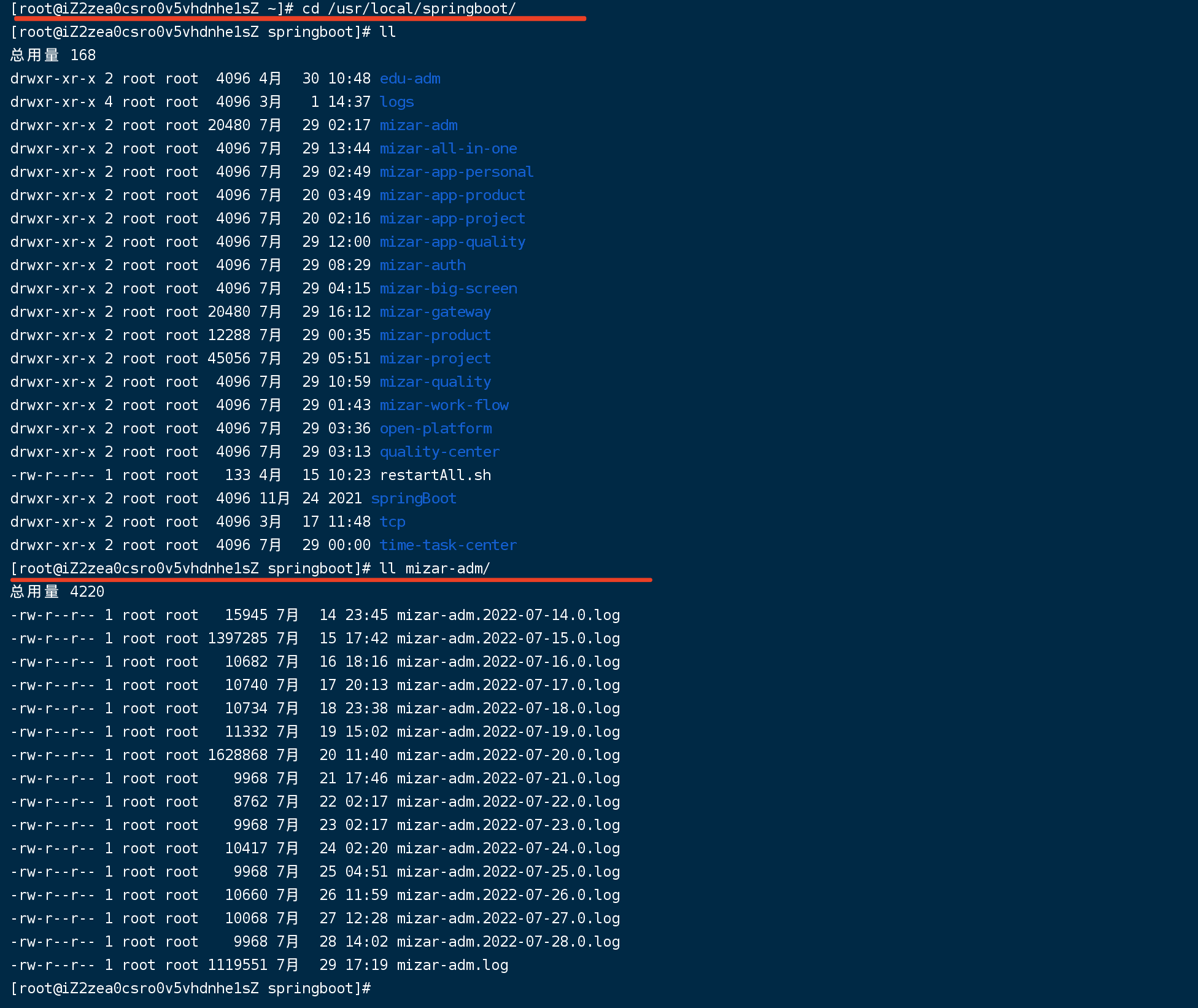

My logs are in /usr/local/springboot/, and the applications are deployed as Docker containers.

Create the directories:

```shell
mkdir -p /usr/local/docker/filebeat/{conf,logs}
chmod 777 /usr/local/docker/filebeat/conf
```

Write the filebeat.yml file (in the conf directory):

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /logs/elk-gateway/elk-gateway.log
    fields:
      appname: elk-gateway
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/elk-auth/elk-auth.log
    fields:
      appname: elk-auth
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/elk-adm/elk-adm.log
    fields:
      appname: elk-adm
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/elk-project/elk-project.log
    fields:
      appname: elk-project
    fields_under_root: true
  - type: log
    enabled: true
    paths:
      - /logs/elk-product/elk-product.log
    fields:
      appname: elk-product
    fields_under_root: true
processors:
  - drop_fields:
      fields: ["log","input","host","agent","ecs"]
# send the logs to Logstash
output.logstash:
  hosts: ['139.155.xxx.xxx:5044']
```

Note: paths such as /logs/xxx/xxx.log are paths inside the container, not the log directory on the host.
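Because filebeat.yml uses container paths, it helps to check on the host that each path actually has files behind it. A minimal sketch, assuming the volume mapping /usr/local/springboot:/logs from the docker-compose.yml below and one "- /logs/..." entry per line under paths:; the function name check_filebeat_paths is hypothetical:

```shell
#!/bin/sh
# Hypothetical check, assuming the volume "/usr/local/springboot:/logs"
# and one "- /logs/..." entry per line under paths: in filebeat.yml.
HOST_LOG_DIR=${HOST_LOG_DIR:-/usr/local/springboot}  # host side of the mount
CONTAINER_LOG_DIR=/logs                              # container side of the mount

check_filebeat_paths() {
  config=$1
  missing=0
  # collect list entries that start with the container log directory
  patterns=$(sed -n "s#^[[:space:]]*-[[:space:]]*\($CONTAINER_LOG_DIR/[^[:space:]]*\).*#\1#p" "$config")
  while IFS= read -r p; do
    [ -n "$p" ] || continue
    # /logs/x/y.log in the container is $HOST_LOG_DIR/x/y.log on the host
    host_path="$HOST_LOG_DIR${p#"$CONTAINER_LOG_DIR"}"
    # left unquoted on purpose so globs such as *.log are expanded
    if ls -d $host_path >/dev/null 2>&1; then
      echo "ok       $p -> $host_path"
    else
      echo "MISSING  $p -> $host_path" >&2
      missing=1
    fi
  done <<EOF
$patterns
EOF
  return "$missing"
}
```

Run it on the application server as check_filebeat_paths /usr/local/docker/filebeat/conf/filebeat.yml; every line should start with ok.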

Write the docker-compose.yml file:

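```yaml
version: "3.7"
services:
  filebeat:
    container_name: filebeat
    image: elastic/filebeat:7.17.5
    restart: on-failure
    volumes:
      - ./conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
      # host log directory -> /logs inside the container
      - /usr/local/springboot:/logs
    # logging driver for this container (json-file is Docker's default)
    logging:
      driver: json-file
```

==This mounts the host log directory /usr/local/springboot into the container as /logs. This step is critical: if it is configured wrong, no index will show up in Kibana.==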
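Once Filebeat is running, you can confirm on the Elasticsearch side that the indices exist before looking in Kibana. A minimal sketch, assuming curl is installed, security is off, and ES_URL points at the ELK server (its IP is elided in this article); the function name check_indices is hypothetical:

```shell
#!/bin/sh
# Hypothetical check. Assumes curl is installed, security is not enabled,
# and ES_URL points at the ELK server's Elasticsearch.
ES_URL=${ES_URL:-http://localhost:9200}

check_indices() {
  status=0
  for index in "$@"; do
    # _cat/indices returns 404 for a concrete index name that does not exist,
    # which makes curl -f fail
    if ! curl -fsS "$ES_URL/_cat/indices/$index?h=index,docs.count"; then
      echo "index $index not found yet" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, check_indices elk-gateway-log elk-auth-log. An index is only created when its first event arrives, so an application that has not logged anything yet will not have one.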

Then start the container (docker-compose up -d):

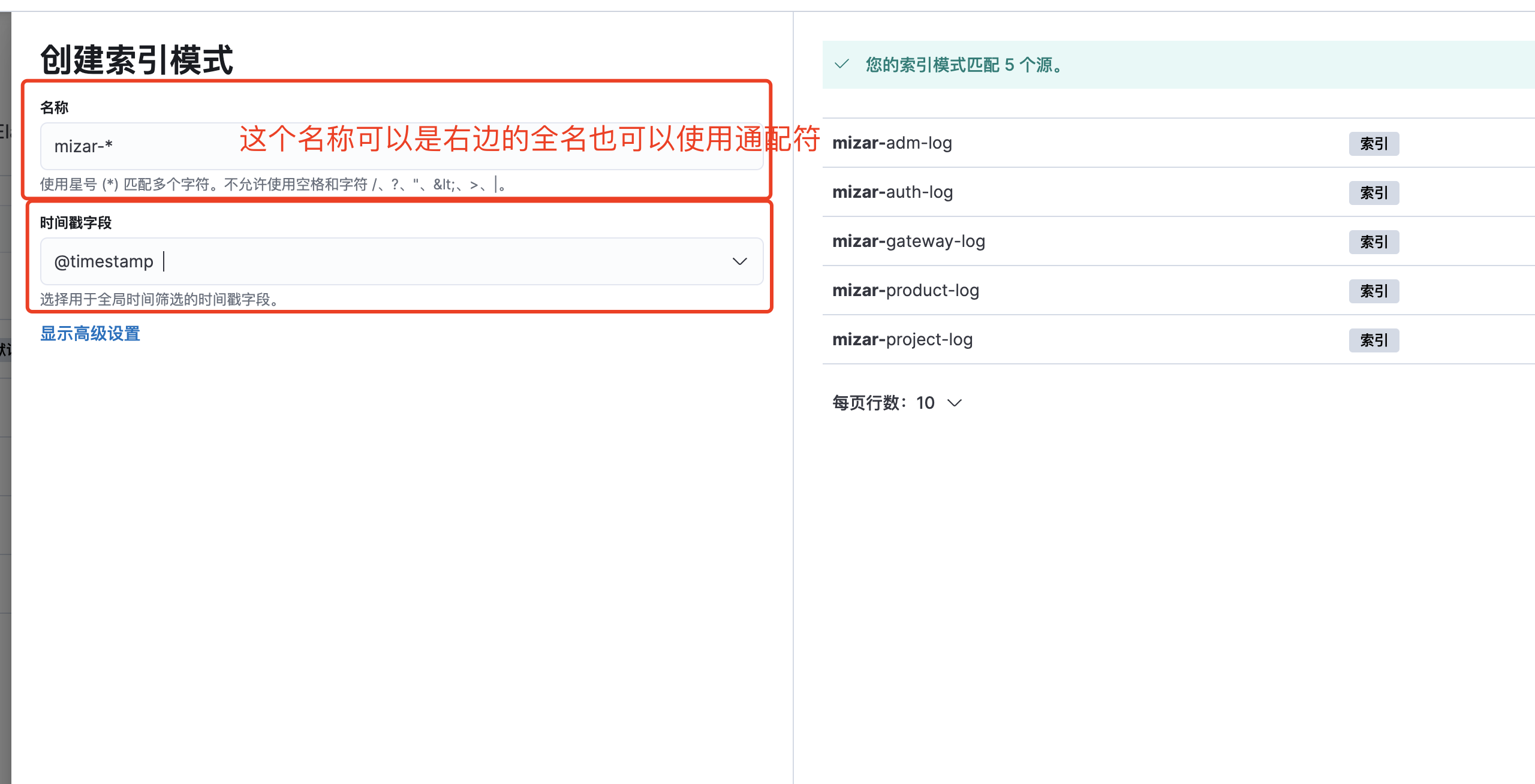

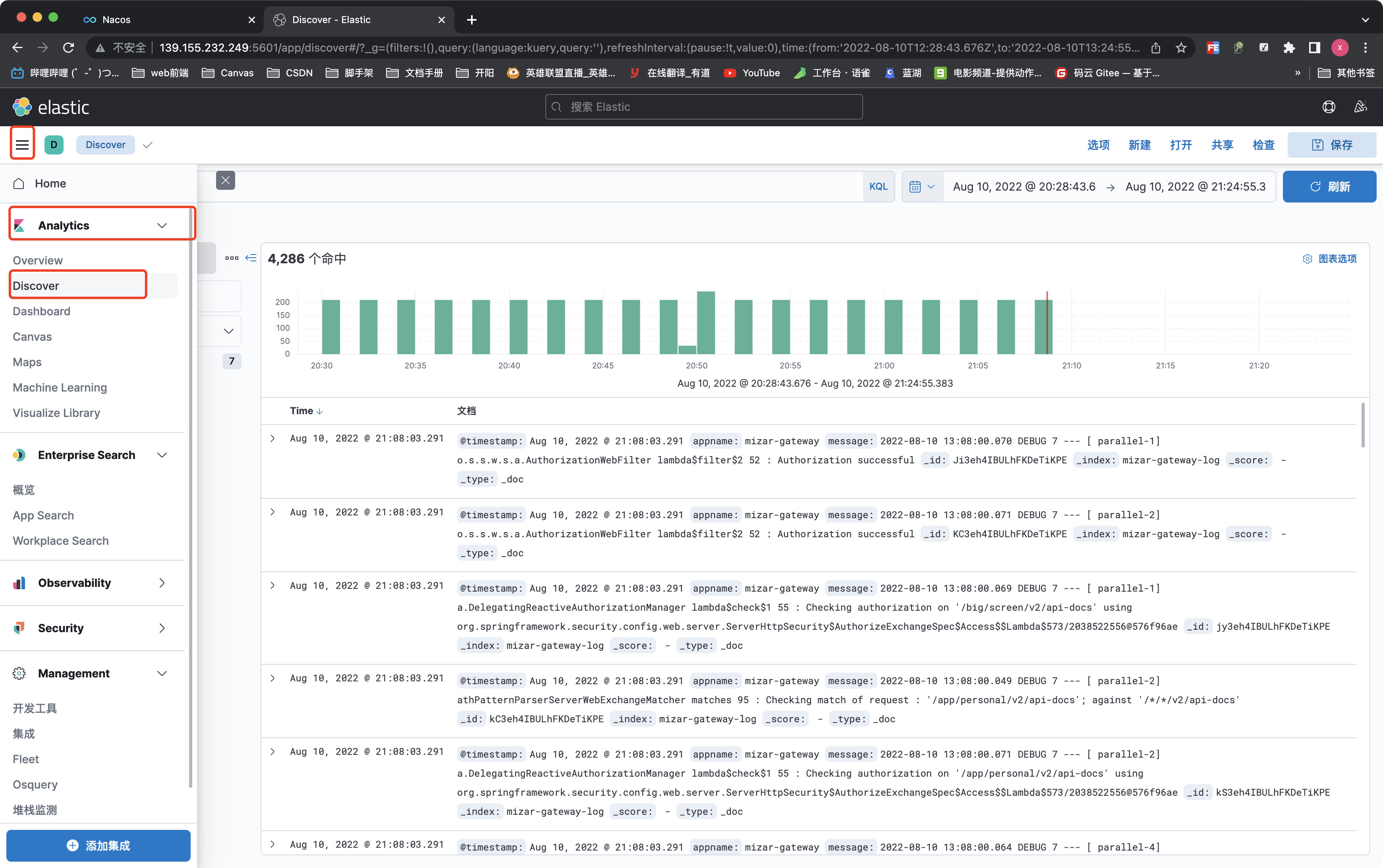

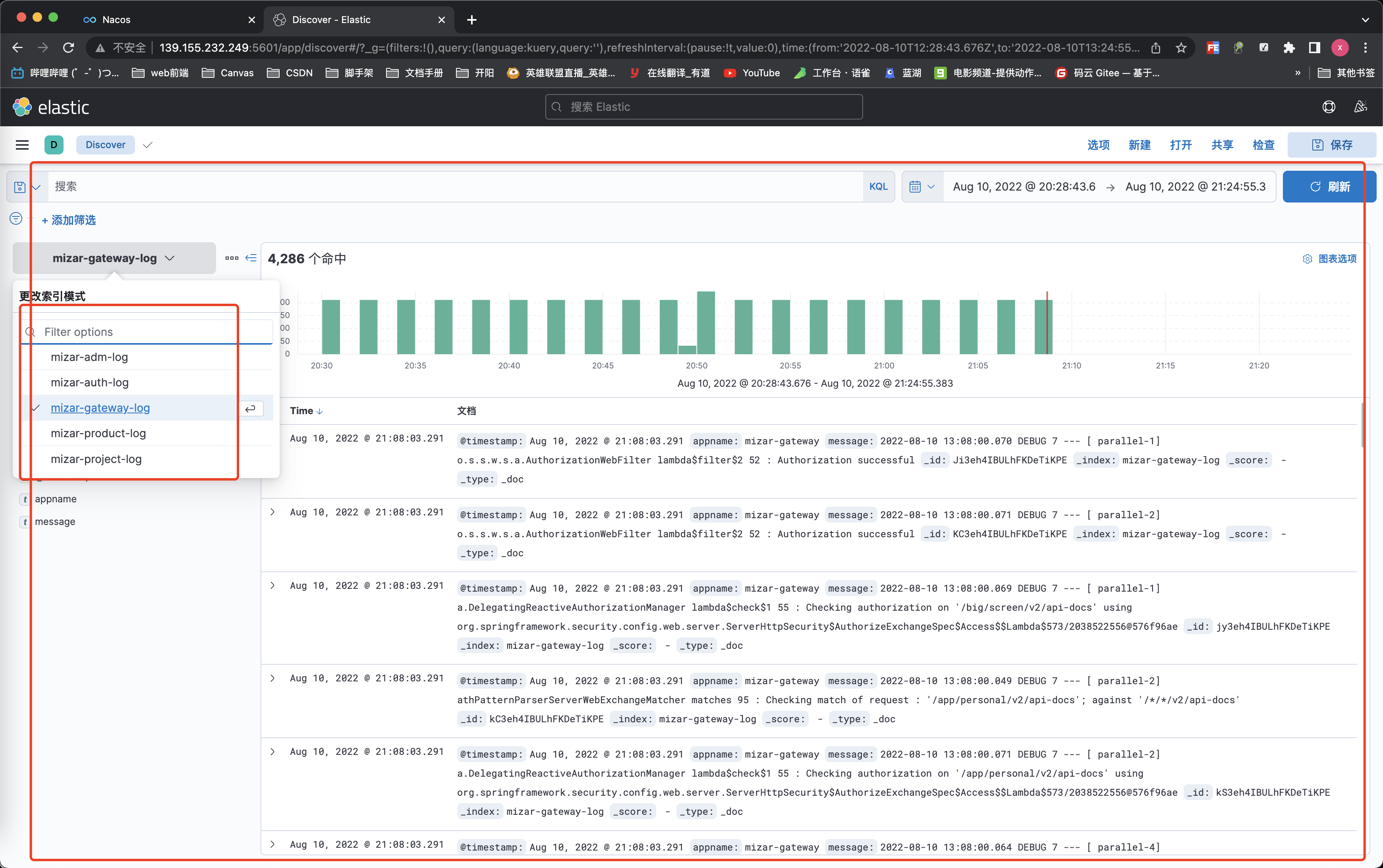
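To see whether Filebeat can load its config and reach Logstash, Filebeat has built-in test subcommands. A minimal sketch that runs them in the container, assuming the container name filebeat from docker-compose.yml and that the filebeat binary is on the container's PATH, as in the official image; the function name check_filebeat is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: run Filebeat's built-in self-tests in the running container.
# Assumes the container name "filebeat" and that the filebeat binary is on the
# container's PATH, as in the official image.
check_filebeat() {
  container=${1:-filebeat}
  # checks that filebeat.yml can be loaded
  docker exec "$container" filebeat test config || return 1
  # tries to connect to the configured output (Logstash on port 5044)
  docker exec "$container" filebeat test output || return 1
}
```

If the output test cannot connect, check that port 5044 on the ELK server is reachable from the application server (firewall or cloud security-group rules); docker logs filebeat shows Filebeat's own log.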

Configure Kibana

Log in at http://139.155.xxx.xxx:5601/

Then configure it by following the steps shown in the screenshots.
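The index patterns can also be created without the UI, through Kibana's saved objects API. A minimal sketch, assuming Kibana 7.17 without security, KIBANA_URL pointing at it (the IP is elided in this article), and @timestamp as the time field; the function name and the saved-object IDs are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper using Kibana's saved objects API. Assumes no security,
# KIBANA_URL pointing at Kibana, and @timestamp as the time field.
KIBANA_URL=${KIBANA_URL:-http://localhost:5601}

create_index_pattern() {
  pattern=$1  # index pattern title, e.g. elk-gateway-log or elk-*-log
  id=$2       # hypothetical saved-object ID of your choice
  # kbn-xsrf is required by Kibana for API calls that change data;
  # overwrite=true lets the call be repeated safely
  curl -fsS -X POST "$KIBANA_URL/api/saved_objects/index-pattern/$id?overwrite=true" \
    -H 'kbn-xsrf: true' \
    -H 'Content-Type: application/json' \
    -d "{\"attributes\":{\"title\":\"$pattern\",\"timeFieldName\":\"@timestamp\"}}"
}
```

For example, create_index_pattern 'elk-*-log' elk-all creates a single pattern that covers all the application indices used in this article.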